Blog post by Cas Goos

The goal of our organization, the Platform for Young Meta-Scientists (PYMS), is to bring together Early Career Researchers (ECRs) working on meta-science and to give them a place to network with peers and discuss research. We consider this an important initiative for two reasons: ECRs have been at the forefront of many reform initiatives, and, despite their growing number, many meta-science ECRs still work disconnected from other researchers with shared interests. Bringing ECRs together is a crucial step toward enabling large-scale research and strengthening the reform initiatives that are key to improving science.

The PYMS Meeting

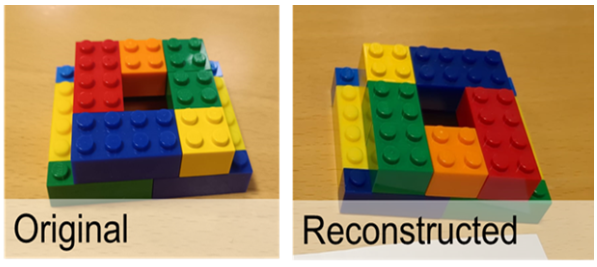

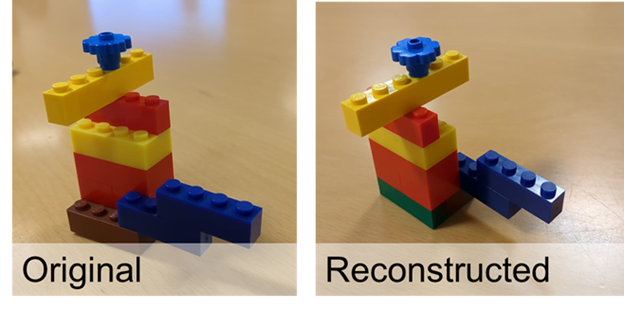

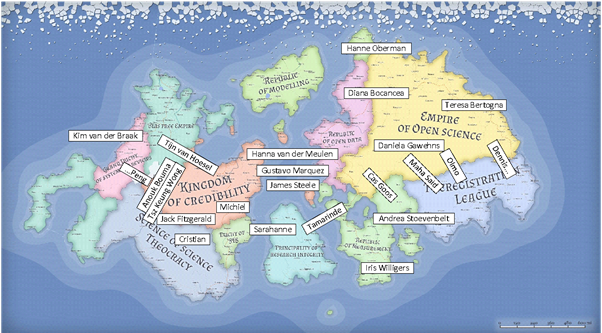

To further these goals, we invited ECRs from a variety of backgrounds to our most recent PYMS meeting, held at the UMCG on December 5th, 2024, as a pre-symposium to the NLRN symposium on December 6th. We are glad to have achieved a broad representation of ECRs, including some from abroad. Presentations spanned a variety of meta-science topics, such as spin, equivalence testing, and reproducibility. Attendees presented and discussed work not only from the social sciences but also from the computational sciences, sports and exercise science, and nanobioscience.

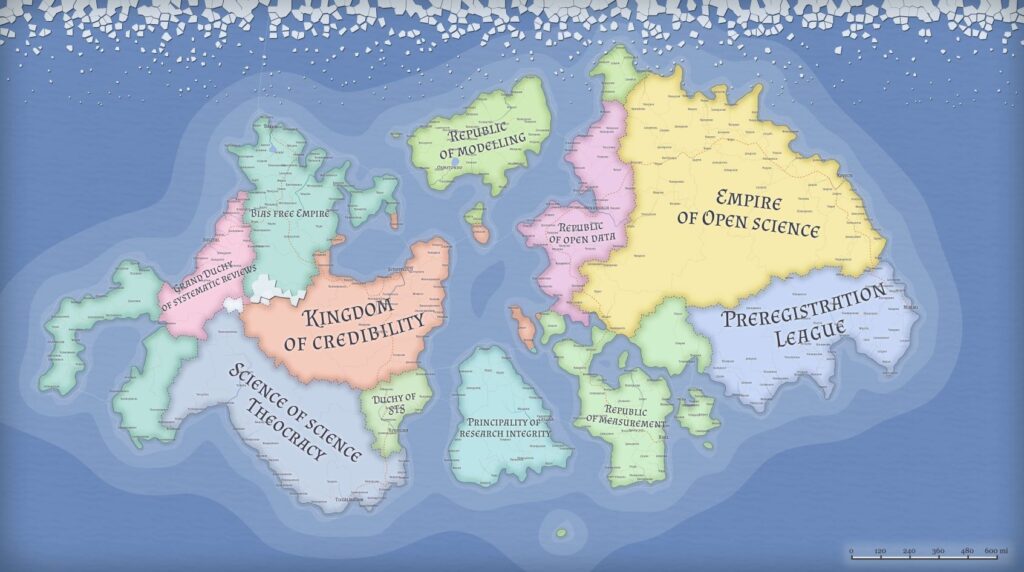

Map of PYMS attendees and their areas of expertise/interest. The map backdrop was created by Tamarinde Haven.

Throughout the day, we also created many opportunities for discussion and networking, including open invitations to collaborate.

We were also fortunate to have Tracey Weissgerber give a keynote talk on building a career in meta-science, followed by a series of provocative statements on the same topic. Together, these sparked a discussion that gave attendees practical insight into pursuing a meta-science career.

The Future of PYMS

Since the end of last year, PYMS has had a renewed board, with four new ECRs joining to carry the torch: Sajedeh Rasti from Eindhoven University, Raphael Merz from Ruhr University Bochum, and Anouk Bouma and myself from Tilburg University. Like the previous board, we plan to keep building a community for meta-science ECRs with informal networking opportunities. However, we also plan to expand the reach of PYMS in new ways.

As a first step, PYMS will go international at our next meeting. By extending our network beyond the Netherlands, we hope to prompt broader collaboration in our field. This matters especially in meta-science, where investigating and improving scientific practice effectively often requires projects with large investments of time and effort from multiple parties. The meeting will be held this summer and will offer plenty of opportunities to discuss future projects. To encourage a broad representation of young meta-scientists, we will also offer financial compensation to international presenters.

Interested ECRs can join our mailing list, which provides a link to our Discord server. There, you can connect with fellow meta-science ECRs and stay up to date on future events, including the next PYMS meeting this summer.